Every Interaction Is a Turing Test: How to Make Sure You Still Sound Human in the Age of AI


About once a day I read an email or LinkedIn post and instantly get the "they used AI to write this" vibe. Using AI is great, and you definitely should be doing so, but once something gives off that "AI feel," I know that I, at least, will likely scan the rest without reading too deeply. That's partly because I'm going to assume the writer didn't add much thought beyond what an AI could have generated, meaning any critical nuance, details, and analysis likely aren't there.

It's funny, too, because our tolerance seems to shift based on who we think is behind the keyboard. If we know we're interacting with a clearly labeled chatbot, we might forgive some stiffness or generic phrasing; we adjust our expectations. But if we suspect a human colleague or contact simply copy-pasted an AI response without adding their own effort or insight? That feels different. It comes across as lazy or impersonal, the modern equivalent of knowing someone just Googled an answer and pasted the first result without engaging. That perceived lack of human effort is often more jarring than the robotic phrasing itself.

It's a tricky balance, and honestly, there isn't an easy shortcut that I know of yet. To do it right, you still need to invest significant time into the manual human part. That means actually reading the drafts yourself, making specific edits, clarifying vague points or adding crucial context the AI missed, and actively guiding the AI with better prompts and iterative feedback. It's not always fun, but it's necessary if you want the output to truly connect.

As AI-powered tools become a standard modern convenience, the line between human and machine-generated text is blurring. But there's still an unmistakable authenticity gap, and my gut tells me what we're feeling comes down to how humans and AI predict and produce language differently.

Let's explore why this gap exists, why our brains seem so good at noticing it, and how you can use this knowledge to make AI your assistant, not just your ghostwriter.

Why Context Matters: Audience Design in Action

When humans talk or write, we don't just string together the most predictably common words. We adapt constantly. We switch tone, change vocabulary, and add in jokes or asides based on who we're talking to, what we want to say, and how we're feeling at the moment.

Linguists call this audience design. We instinctively adjust our language for our listener and context. You've probably experienced this when a significant other or friend suddenly picks up a work call and drops into their "work voice." It feels strange because those words and that formality don't match your usual shared context. The words are still human, and completely appropriate for the situation, but something about it is jarring to you.

Sometimes, imperfections are what make communication feel real. Years ago in Japan, I booked a hotel room online. The smoking preference form gave me these options: "Yes," "No," "Any of the Above," or "None of Any of the Above." It made no logical sense, but I loved its quirkiness. That little "lost in translation" moment felt so unmistakably human that I booked the hotel anyway. The same goes for emails. A typo in a resume isn't ideal, but in a quick message? It's often just a reminder that a real, imperfect person wrote it. (I'd even argue that a reader might prefer a human-induced typo over yet another flawless, AI-generated list of highlights, and as a result we might see typos start slipping in on purpose.)

AI Writing: The Statistical Plains

Large Language Models work differently. They're next-word predictors trained on billions of sentences from books, websites, and forums. They learn which words commonly follow others but don't genuinely understand context the way people do.

Here's the catch: when you average out a billion voices, the result is often fluent but bland. It becomes a kind of statistical middle ground. AI tends to choose safe phrases and structures common in its dataset. That's why AI-generated emails often start with "Thank you for reaching out…" or frequently use words like "delve" and "moreover," or seem overly fond of formal punctuation.
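To make the "statistical middle ground" idea concrete, here's a toy sketch. It is nowhere near a real LLM: it just counts which word follows which in a tiny made-up corpus and always picks the most common continuation. The corpus and the function names are my own assumptions for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely illustrative (not real training data)
corpus = [
    "thank you for reaching out",
    "thank you for reaching out",
    "thank you for your patience",
    "thank you so much",
    "thanks a ton for the help",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def most_likely_continuation(follows, start, max_words=5):
    """Greedily extend `start` with the most frequent next word each time."""
    words = [start]
    while len(words) < max_words and follows[words[-1]]:
        next_word = follows[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)

model = train_bigrams(corpus)
print(most_likely_continuation(model, "thank"))  # thank you for reaching out
```

Always taking the most common path lands on the most familiar phrase in the data, and the quirkier lines ("thanks a ton") never surface. Real models are far more sophisticated, but the pull toward the safe choice is similar in spirit.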

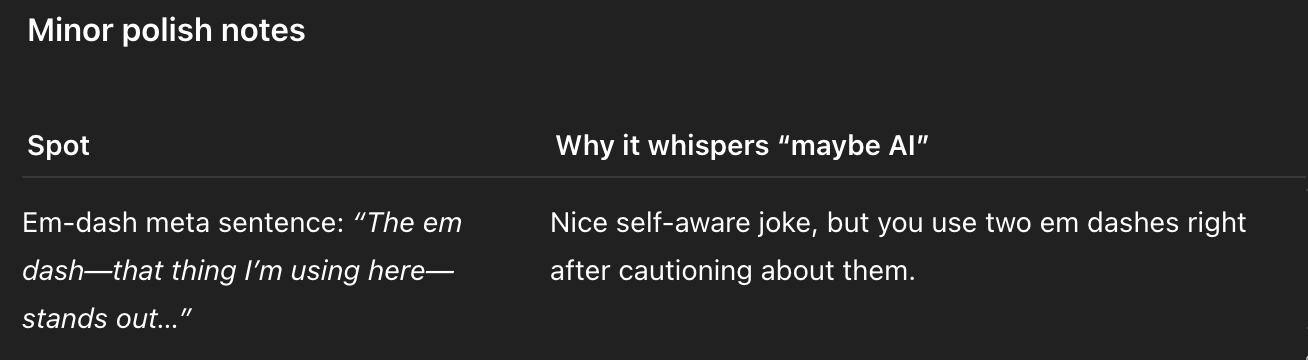

The em dash, that long dash that turns up all over AI-generated text, stands out as an example. Why does AI love it? My guess is that em dashes are common in books and essays, which make up a huge chunk of its training data. But when people type emails or blog posts, an em dash is sort of hard to type out, so we usually go with a hyphen or comma. AI simply copies the pattern it sees most often, resulting in writing that feels oddly literary where it doesn't belong.

It's not that AI can't be creative; it just doesn't have lived experience or intent. Its mistakes aren't endearingly quirky; they're usually just generic or too polished. It's like always seasoning with salt but laying off the spice so that "everyone will like it."

Why AI Sometimes Sounds Like an Out-of-Place Newscaster

This tendency towards formality often leads to a significant context mismatch. Most models learn by consuming vast libraries of text like books, articles, and encyclopedias, much of which is formal and polished.

It's a bit like using a "newscaster voice," that highly polished, non-regional, authoritative tone perfect for delivering headlines to thousands. But imagine using that same voice in a normal social interaction - it's so absurd that it's a huge part of the premise of the movie Anchorman: The Legend of Ron Burgundy.

That's what happens with AI writing too, albeit less comedic. The model echoes the polished styles from its training data. That's why so many AI emails or blog posts sound like they're addressing thousands, even when you just need to help one frustrated customer.

The main thing to remember is that language is about fitting the context, not just following rules. If you want your AI-generated writing to resonate, you need to help it break out of "book mode" and into "conversation mode" by providing context, style cues, and your own human touch.

Why Does AI Writing Feel So Bland? (Or Even Uncanny?)

If there's one thing AI nails with all that training data, it's acting like someone getting ready to snuggle up with a shareholder report. The issue isn't always that it doesn't sound human; it's that it sounds like a very specific kind of human in a very specific, often formal, context. That's great for an op-ed or memoir, but for everyday communication? It often doesn't hit the mark.

Our brains pick up on this instantly. When everything sounds polished and generic, when there's no sign anyone thought about what you're reading, you tune out because you know nothing surprising is coming. It isn't uncanny so much as sterile. It reads like the uniform beige drop-ceiling panels you see in offices.

Image source: Wikimedia Commons

To flip this around: Imagine training an AI exclusively on corporate emails (the endless "per my last message," "circling back") and then asking it to write a novel. You'd likely get chapters that read like HR policies. The words would be "human," sure, but they'd be the right words used in the wrong way.

So really, it's all about context: not just sounding human, but sounding like the right human for the situation.

Can AI Ever Sound Truly Human?

Researchers are actively working on this. They're building models that remember context, integrate images and sounds ("multimodal learning"), and even simulate intent. Progress is happening, but a fundamental gap remains: AI lacks goals, memories, and real experiences.

AI can vary its output if prompted correctly, but it doesn't truly understand why. It's missing the intuition to naturally shift from its "annual report" voice to its "socializing with a friend" voice.

For now (and probably for a long while yet), bridging the authenticity gap means actively engaging with AI drafts rather than relying solely on the generated text.

How to Make AI Writing Less Bland (and Actually Useful)

Here's where things get practical. With a little effort you can harness AI's strengths, like speed and breadth of knowledge, while staying human. Here are some tips:

Set Context and Voice Clearly. Clarify who is writing, who they are writing to, and why: "You're a support rep writing a friendly email to a frustrated customer." Try to nudge the model out of its default "white paper" mode by being explicit about the desired tone.

Request Rhetorical Devices and Emotion. Ask explicitly for human touches like humor or personal stories to make the writing more engaging and relatable, but don't let the AI come up with your personal story; it'll probably hallucinate something. Asking it to interview you about personal stories that might be relevant will usually get you what you need.

Provide Style Examples. Paste a paragraph in your desired style and ask the AI to continue similarly. Showing is often more effective than telling.

Adjust AI Settings (Carefully). If possible, slightly increase the randomness (often called the "temperature" setting) to break repetitive patterns, but be cautious not to overdo it, as quality can degrade quickly.

Feed Real Context into Prompts. Include actual details or relevant excerpts to ground the response and help the AI avoid generic, surface-level output.

Always Edit Thoroughly. Read drafts aloud. Rewrite anything that feels off or doesn't sound like you. Inject your personality, specific insights, and unique quirks. This human editing step is crucial.
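Two of the tips above, setting context and adjusting temperature, can be sketched in a few lines. This is a rough illustration under my own assumptions: `build_prompt` and `softmax_with_temperature` are hypothetical helpers, the word scores are invented, and no real AI service is called. The second function shows the standard idea behind temperature: dividing scores by a higher number flattens the odds, so less common words get picked more often.

```python
import math

def build_prompt(writer, reader, goal, tone, style_sample=None, facts=()):
    """Assemble a prompt that states who is writing, to whom, and why."""
    lines = [
        f"You are {writer} writing to {reader}.",
        f"Goal: {goal}",
        f"Tone: {tone}",
    ]
    if facts:
        lines.append("Use only these real details:")
        lines.extend(f"- {fact}" for fact in facts)
    if style_sample:
        lines.append("Match the style of this sample:")
        lines.append(style_sample)
    lines.append("If a personal story would help, ask me for one instead of inventing it.")
    return "\n".join(lines)

def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw word scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {word: e / total for word, e in zip(scores, exps)}

prompt = build_prompt(
    writer="a support rep",
    reader="a frustrated customer",
    goal="Apologize for a late order and explain the next step.",
    tone="friendly, plain, no corporate filler",
    facts=["Order arrived two weeks late"],
)
print(prompt)

# Invented scores for possible opening words (higher = more "expected")
scores = {"Thank": 3.0, "Hi": 2.0, "Hey": 1.0, "Yo": 0.0}
low = softmax_with_temperature(scores, temperature=0.5)
high = softmax_with_temperature(scores, temperature=1.5)
print(round(low["Thank"], 2), round(high["Thank"], 2))
```

At the lower temperature the safe opener dominates; at the higher one the alternatives get a real chance. Push it too far and everything becomes about equally likely, which is where quality starts to fall apart.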

Bottom Line: Keep Your Voice Front and Center

AI gets smarter every day, but if you want your words to stand out from all those beige paragraphs out there, you need to do more than just hit "generate." Authentic communication still relies heavily on human judgment and personality.

Use these strategies to keep your voice clear, even when starting with AI-generated drafts. Genuine human writing remains your best competitive edge.

And when I asked AI to check whether this article sounded like AI, it completely missed the joke.